In the fast-moving world of technology, Elon Musk’s ventures rarely go unnoticed. Months after he signed an urgent open letter calling for a pause on training LLMs more capable than GPT-4, Musk made his characteristic move: doing the exact opposite of what he had advocated. Enter xAI, Musk’s newest venture, and Grok, a brand-new LLM unveiled on Saturday.

The project drew media attention not only because of Musk’s involvement but also because of the elite team of AI experts the company recruited from leading startups and tech giants. Although xAI was pitched as building AI that could “understand the world,” it remained vague about the “how” and the “what” of its work. That is, until last week.

Is this simply an overhyped chatbot riding the enormous wave of popularity and hype surrounding its predecessors, or is it another classic Musk effort to upset the status quo?

A Steady Flow of Up-to-Date Information

Grok could change the game because it has access to the full stream of Twitter content. According to xAI, Grok will have “real-time knowledge of the world,” drawing on news and a range of opinions about current affairs as they unfold.

By absorbing comments from the Twitter community, which work somewhat like annotations, Grok can learn about events from several points of view and build a more comprehensive picture of the world.

Recent research indicates that consumers have already changed how they look for news, turning to social media first rather than conventional media. Grok’s integration with Twitter could accelerate this shift even further by giving users real-time context, comments, and, if done correctly, real-time fact-checking. As xAI pointed out, Grok’s real-time knowledge keeps it abreast of global events and lets it respond promptly and relevantly.

Positive

Fun Mode: Elon’s Dream Made Real

Grok’s so-called “Fun Mode” appears to realize Elon Musk’s ideal of a fun-loving AI, letting the LLM crack jokes, respond in a lighthearted yet factually correct manner, and give users a whimsical, informal chat experience.

One complaint about current LLMs such as ChatGPT is that some users find them overly politically correct and sanitized, which can make conversations feel less natural and spontaneous. Some localized LLMs also struggle with long-term interactions. Grok claims to bridge this gap with its Fun Mode, which could make it a delightful way to pass the time for people who want to relax.

This idea is not wholly original: Quora’s Poe offers a comparable service with sophisticated chatbots, each with a distinct personality. But integrating an LLM with Grok’s capabilities could take the experience to a new level.

Native Internet Access

Grok’s capability to access the internet without the need for a plug-in or additional module is one of its key differentiators.

Its precise browsing capabilities are still unknown, but the concept is intriguing. Imagine an LLM that cross-references data in real time to improve its factual accuracy. Combined with its access to Twitter content, Grok could change how people engage with AI, since users could rely on up-to-date information rather than only on pre-existing training data.

Multitasking

According to reports, Grok can multitask, letting users hold several chats at once. Users can explore other subjects while waiting for a reply in one thread, then move on to another.

The chatbot also offers conversation branching, which lets users dig deeper into particular subjects without disrupting the main conversation. A visual map of all conversation branches makes it easy to switch between topics.

An Outspoken AI with Little Censorship

Elon Musk had a clear vision for Grok: an AI that doesn’t hesitate to speak its mind.

All major AI chatbots have safeguards against harm and misinformation, but these can sometimes feel restrictive. Users have seen models such as ChatGPT, Llama, and Claude withhold answers, erring on the side of caution to avoid offending anyone. However, this caution can also filter out responses that are harmless or even desirable.

Grok can respond with greater flexibility, which could make for a more genuine and unfettered conversational experience. As xAI has demonstrated, Grok is designed to tackle contentious questions that other AI systems would decline.

On paper, Grok offers a distinctive blend of humor, accuracy, freedom, and real-time information. But like every invention, it comes with drawbacks and risks to consider.

Negative

Hurried Development and Limited Training

Grok’s rapid development raised eyebrows right away. In the world of LLMs, two months of training and 33 billion parameters seem like a drop in the bucket, as xAI itself acknowledged: “Grok is still a very early beta product—the best we could do with two months of training.”

This gap in development timelines suggests that Grok may have been rushed out to ride the AI hype wave. For context, OpenAI has been open about its development approach, noting, “We’ve spent six months iteratively aligning GPT-4.”

Furthermore, xAI doesn’t disclose how much hardware was used to train Grok, which leaves room for unchecked speculation.

All About the Limits

For the uninitiated, an LLM’s parameters are the learned weights that encode what the model picked up during training. Their count is a rough proxy for the model’s effective brain capacity, which affects how well it can process and produce information. At first glance, Grok’s 33 billion parameters may seem impressive.

But in the cutthroat LLM landscape, that makes it just one more contender. Its parameter count may not be enough to support complex business requirements or to match the output quality that industry leaders such as ChatGPT, Claude, and Bard have set as the benchmark.

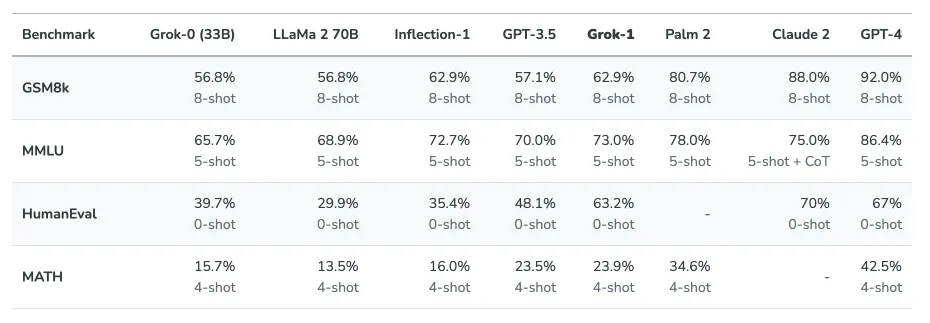

Grok’s low parameter count contributes to its inability to outperform other popular models in important benchmarks such as HumanEval or MMLU:

Beyond parameter count, another concern is context handling, or more specifically, the amount of data an AI chatbot can take in within a single input (its context window). Here Grok has little to offer. According to xAI, Grok can process 8,192 tokens of context, whereas GPT-4 can handle up to 32,000 and Claude up to 100,000. OpenAI’s new GPT-4 Turbo offers a context window of 128,000 tokens.
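To put the gap in perspective, the short Python sketch below expresses each model’s context window as a multiple of Grok’s, using only the token counts quoted in the paragraph above. The `CONTEXT_WINDOWS` table and the `relative_to` helper are hypothetical names introduced for this illustration; they do not come from any vendor’s API.

```python
# Context-window sizes in tokens, as quoted in the article (not fetched from any API).
CONTEXT_WINDOWS: dict[str, int] = {
    "Grok": 8_192,
    "GPT-4": 32_000,
    "Claude": 100_000,
    "GPT-4 Turbo": 128_000,
}


def relative_to(baseline: str, windows: dict[str, int]) -> dict[str, float]:
    """Return each model's context window as a multiple of the baseline model's."""
    base = windows[baseline]
    return {name: size / base for name, size in windows.items()}


if __name__ == "__main__":
    for name, ratio in relative_to("Grok", CONTEXT_WINDOWS).items():
        print(f"{name}: {ratio:.1f}x Grok's context window")
```

With these figures, GPT-4’s window works out to roughly 3.9 times Grok’s, Claude’s to about 12.2 times, and GPT-4 Turbo’s to about 15.6 times.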

The Cost of Creativity

Cost is an important factor in judging any product’s worth, and Grok is no exception. It is available only to users willing to pay $16 a month for the privilege of chatting with it.

Grok could be a hard sell next to free options such as Claude 2 and ChatGPT running GPT-3.5 Turbo, which are widely praised for their accuracy; Claude 2 already outscores Grok on several benchmarks.

Moreover, GPT-4, widely regarded as the strongest LLM available, outperforms Grok and has the added benefits of being multimodal and widely accessible.

Is Grok’s launch just a calculated attempt to boost Twitter Blue subscriptions and, with them, Twitter’s revenue?

These concerns highlight the challenges Grok faces in becoming a significant force in the LLM space. And its drawbacks extend beyond cost.

Further Drawbacks

Imitation of Fiction

Modeling an LLM on a fictional character from a well-known novel is undeniably a creative choice. But while a fictional persona can be appealing, it carries risks in a society where accurate information matters more and more. Users who turn to AI for answers to important questions or for guidance may find themselves at odds with a system designed to mimic a humorous persona.

There is also a risk that users will mistake humorous or sarcastic answers for accurate information as the line between fact and fiction blurs. In the digital era, when every piece of information is analyzed and shared, the consequences of such misunderstandings can be far-reaching, particularly when multiple languages are involved and humor can easily get lost in translation.

Wit and comedy have their place, but it’s important to strike a balance, especially when users are looking for insightful information. Comedy may be more entertaining than truth, yet favoring it exposes a fundamental flaw, given what an LLM should provide above all: accurate information.

Overpromised and Underdelivered

Elon Musk’s lofty claims about Grok have set extremely high expectations, but a closer look suggests a gap between the hype and the facts. One key limitation of typical LLM training methods is that they cannot truly reach “super AI” territory, because they are bounded by their training data.

Compared with the other LLM giants, Grok looks modest, with 33 billion parameters and only two months of training. The idea of a lighthearted, fictional personality is intriguing, but it may be unrealistic to expect groundbreaking results from a model trained with conventional techniques.

Exaggeration is common in the AI community, and given how fast the field is moving, it’s important for consumers to see through the hype. Earning the label “super AI” is extremely difficult, and Grok is unlikely to qualify with its current setup and training.

In fact, to showcase Grok’s superiority, Elon Musk compared its conversational responses with those of a small LLM trained for coding. Suffice it to say the contest was hardly fair.

The Threat of Misinformation

Although LLMs are powerful, they are not perfect. Without strict guidelines, telling reality from fiction becomes extremely difficult. Several examples serve as cautionary tales, such as chatbots trained on 4chan data or Microsoft’s Tay, an earlier chatbot that was allowed to interact with users on Twitter. Besides spewing hate speech, some of these bots successfully passed as real people, deceiving a sizable online audience.

Nor is this flirtation with false information new for Twitter. The platform’s reputation has suffered since Musk took control, which may raise doubts about Grok’s reliability as a source of facts. LLMs also sometimes hallucinate, which can have alarming consequences if those fabrications are taken as true.

Misinformation could be a ticking time bomb. Users increasingly rely on AI for insights, and inaccurate information can lead to poor decisions. If Grok wants to be a reliable ally, it must take care that its lighthearted nature doesn’t distort the facts.

Absence of Multimodal Functionality

In the rapidly evolving field of artificial intelligence, Grok’s text-only approach seems dated. Because Grok is a paid service, consumers may reasonably wonder why they should pay for it when other LLMs offer richer, multimodal experiences.

For example, OpenAI has already made progress in the multimodal space with GPT-4V, and ChatGPT can now see, hear, and speak. Google’s upcoming Gemini model promises a comparable feature set. Against this backdrop, Grok’s offering looks mediocre, which raises further questions about its value proposition.

The market is competitive, and consumers are becoming more discerning. If Grok hopes to carve out a place for itself, it must offer something truly unique. As it stands, Grok has its work cut out for it, since rivals offer superior features and accuracy, often at no cost.